A toolkit to explore ethical complexities of AI Therapy for Mental Health with AI Developers & Future Elderly Users.

The Problem

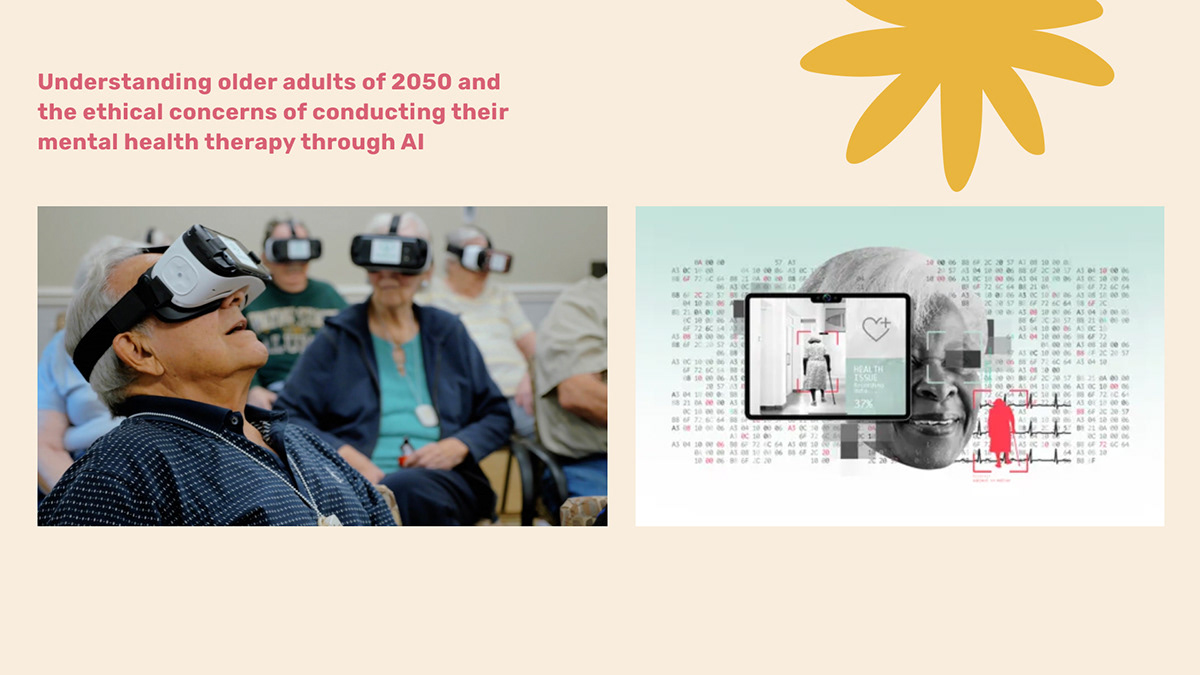

Desktop research led us to understand that today's millennials and Gen Z may be the older adults using AI therapy by 2050. Loneliness, cultural and technological isolation, and the stigmatisation of mental health problems may be individual problems in the future as much as they are in the present. Given the current pace of AI development and its regulation, there may be ethical concerns when AI therapy is used to derive personal information about individuals, their interactions and the support they receive. These concerns include autonomy, privacy, fairness and bias, among others. Because they span both human and technological levels, they collectively require more collaboration among the stakeholders involved in, and responsible for, developing this technology. This highlights the need for a toolkit that encourages conversation and imagining the future.

User Centring

Following the design justice framework, user centring allowed us to identify stakeholders who may be directly or indirectly impacted, as well as those who may be left out. While the direct users of AI Therapy in 2050 will be older adults, the system also includes healthcare facilities that may provide space for AI Therapy, psychology experts whose research informs the AI, and the friends and family members of older adults facing mental health issues. In addition, a brainstorm followed by desktop research revealed entities that may be part of the system but are not obviously considered, such as pet stores, caregivers’ families, future generations, entertainment and social media, among others. This mapping helped us identify core stakeholders and design a toolkit built around world-building and imagining the future.

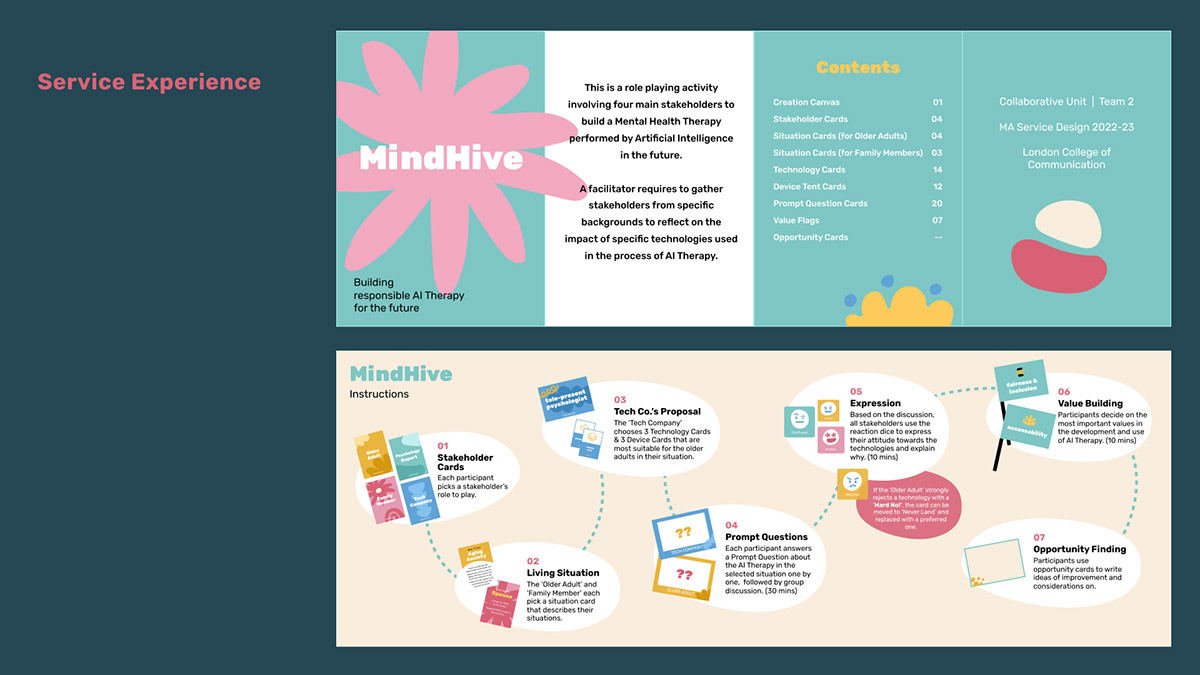

This service, in the form of a toolkit, focuses on three core actions: empathy, by co-building the future; conversation, by exploring the complex system through its leverage points; and value building, through in-depth discussions on AI ethics.

The toolkit exists online as well as in a physical form consisting of a board, stakeholder roles, reaction dice, ethical values, technology cards, prompt questions and sticky notes. It is run by a facilitator with a group of psychology experts, older adults (or anyone role-playing an older adult from 2050), family members of older adults, and AI or technology professionals. The toolkit also comes with instructions for facilitators and users.

The objective of the toolkit is to encourage collaboration among stakeholders and consider AI ethics in the present in order to develop a more inclusive and safe AI therapy in the future, through world building and discussion.

Research through Design

The workshop sessions followed three of the ten Design Justice Principles (2018) to help participants build and reflect on a future scenario together:

2. Center the voices of those who are directly impacted by the outcomes of the design process.

4. View change as emergent from an accountable, accessible, and collaborative process, rather than as a point at the end of a process.

6. Believe that everyone is an expert based on their own lived experience, and that we all have unique and brilliant contributions to bring to a design process.

These iterations led to the final toolkit, which was then tested online with industry professionals and real stakeholders.

To test the toolkit with real stakeholders, we held an online workshop. The participants included individuals from technology and psychology backgrounds, as well as someone with personal experience of caring for elderly relatives. In addition, a millennial took on the role of a future older adult. I facilitated this workshop, guiding the participants through the steps and conducting the interactions, while other team members handled logistics and documentation. The participants offered a wealth of knowledge and expertise, making for a valuable workshop.

Stakeholder Feedback

“It will be more helpful to imagine the situation if a video or more specific story is provided.”

Paxton, Student, CCI - London, UK

OLDER ADULT

“Too many technology and devices cards may make participants feel overwhelmed and distracted.”

Ryan, IT Consultant - Derby, UK

TECH COMPANY

“This workshop may help service receivers gain more awareness about the topic of ethics in therapy.”

Abhishek, Entrepreneur - New Delhi, India

FAMILY MEMBER

“I had so much input to provide. I wish there was more space and time for expert opinions to be added.”

Nidhi, Mental Health Counsellor - Bangalore, India

PSYCHOLOGY EXPERT

Conclusion & Realisation

After multiple design critique sessions and one round of testing the final prototype with real stakeholders, this service design project concluded within the Collaborative Unit of the MA Service Design program. Following this last round of testing, the project still requires a few next steps: building a facilitator’s guide to help participants break the ice and keep track of time during discussions, and improving the mechanism to give psychology experts more space in the activity than tech and AI experts.

As a team of five students, we built a collective understanding of the ethical principles needed to develop and regulate artificial intelligence. Our Ways of Working led us, as a group of contrasting personalities, to voice our perspectives, to understand and accept our roles within the team over the course of the project, and to conduct Research through Design, eventually presenting this toolkit in a show-and-tell session with our faculty. Personally, this project led me to ask, for the first time: where does service design end? The design process is open-ended; given the capacity, we could continue testing the toolkit with more users and stakeholders, gather more diverse feedback and iterate further.

THANK YOU!